Gradient Descent and Cost

- 24-07-2022

- chuong xuan


Why is gradient descent important in machine learning? It is an iterative algorithm for minimizing a loss function. The loss function describes how well the model performs for a given set of parameters (weights and biases), and gradient descent is used to find the parameter values that make the loss as small as possible. Examples include updating the parameters of a linear regression model or the weights of a neural network.


What is a Cost Function?

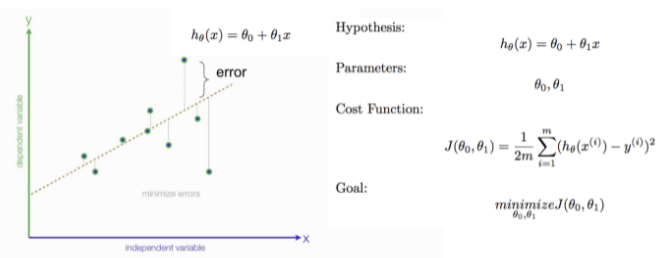

The cost function (or loss function) measures how far the model's predictions are from the true values. The goal is to find the weights and biases that minimize this cost. For regression, a common choice is MSE (mean squared error), which averages the squared difference between the true value of y and the predicted value. The regression equation is a straight line of the form hθ(x) = θ0 + θ1x, where θ1 is the weight and θ0 is the bias.
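To make the definition concrete, here is a minimal sketch of the MSE cost for the straight-line model. The function names `predict` and `mse_cost` and the sample data are illustrative assumptions, not part of the original article. Some texts divide by 2n instead of n; that changes the scale of the cost but not where its minimum lies.

```python
def predict(x, theta0, theta1):
    """Straight-line hypothesis: bias theta0 plus weight theta1 times x."""
    return theta0 + theta1 * x


def mse_cost(xs, ys, theta0, theta1):
    """Mean squared error between predictions and true values."""
    n = len(xs)
    squared_errors = [(predict(x, theta0, theta1) - y) ** 2 for x, y in zip(xs, ys)]
    return sum(squared_errors) / n


# Hypothetical data generated from y = 1 + 2x
xs = [1.0, 2.0, 3.0]
ys = [3.0, 5.0, 7.0]

perfect_cost = mse_cost(xs, ys, theta0=1.0, theta1=2.0)  # parameters that fit exactly
poor_cost = mse_cost(xs, ys, theta0=0.0, theta1=0.0)     # parameters that fit badly
print(perfect_cost, poor_cost)
```

The parameters that match the data give a cost of zero, while the all-zero parameters give a much larger cost, which is the gap the optimizer is meant to close.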

Cost function optimization

The main goal of almost every machine learning model is to minimize a cost function.

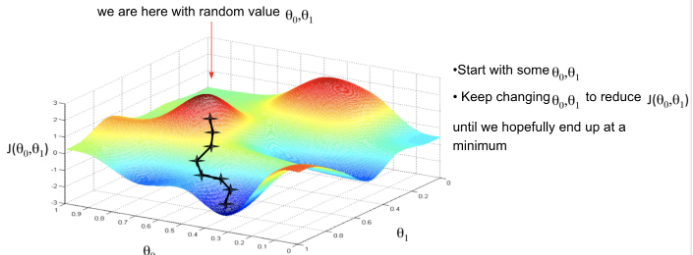

The idea is to move from the red peaks (high cost) down to the blue valleys (low cost). To do that, we need to adjust the parameters θ0 and θ1.

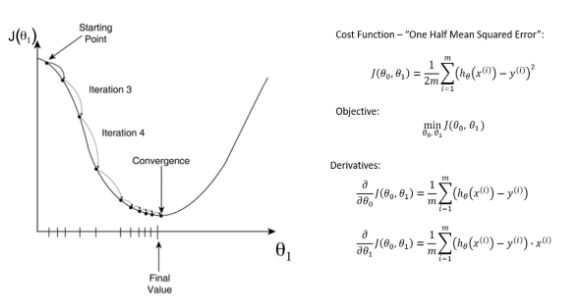

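Gradient descent runs iteratively: at each step it adjusts the parameters in the direction that reduces the cost function, until it settles near a minimum. Mathematically, the derivative (gradient) of the cost with respect to each parameter points in the direction of steepest increase, so stepping in the opposite direction lowers the cost. At a minimum the derivative is zero.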
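The iteration described above can be sketched as follows for the straight-line model with an MSE cost. This is only an illustration under stated assumptions: the function name `gradient_descent`, the learning rate, the number of steps, and the sample data are all hypothetical choices, and a learning rate that is too large for a given dataset can make the updates diverge.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=5000):
    """Fit theta0 (bias) and theta1 (weight) by batch gradient descent on MSE."""
    theta0, theta1 = 0.0, 0.0  # assumed starting point
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every sample under the current parameters
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        # Partial derivatives of the MSE with respect to theta0 and theta1
        grad0 = (2.0 / n) * sum(errors)
        grad1 = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        # Step against the gradient to reduce the cost
        theta0 -= learning_rate * grad0
        theta1 -= learning_rate * grad1
    return theta0, theta1


# Hypothetical data generated from y = 1 + 2x
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

theta0, theta1 = gradient_descent(xs, ys)
print(theta0, theta1)
```

On this noise-free data the estimates approach the bias 1 and weight 2 used to generate it. Real data will not fit exactly, and the step size and iteration count usually need tuning.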